A global team of astronomers and machine learning researchers today announced the release of the Multimodal Universe – a groundbreaking 100-terabyte dataset that brings together hundreds of millions of observations from leading astronomical surveys at unprecedented scale and detail. AI methods have always thrived on large datasets; the Multimodal Universe is the largest astronomical dataset designed for AI models.

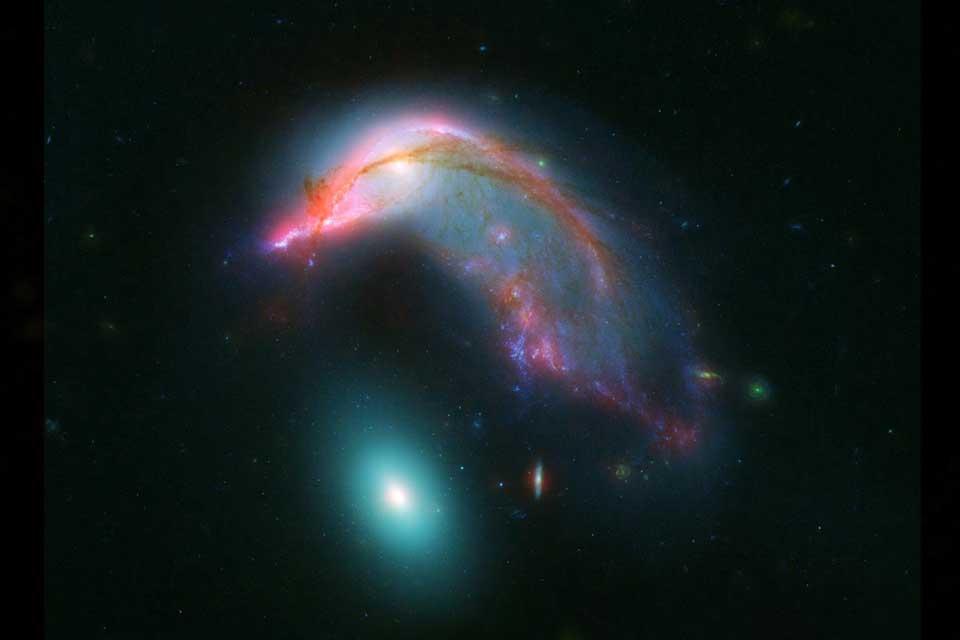

'Our work, from 25 institutes through 29 researchers, paves a path for machine learning to become a core component of modern astronomy,' says Polymathic AI member Dr Micah Bowles, a Schmidt AI in Science Fellow at the University of Oxford. 'Building the infrastructure for data is often a large expense in astronomy – this release, for the first time, enables large multimodal AI models in astronomy, which will undoubtedly become a core factor in how we access and handle our data. Our ability to undertake research programs into fundamental physics and our place in the universe will be faster than ever before. I look forward to the day that I can search all of our data finding my favourite penguin shaped galaxies and even the stars which flicker along with the beat of my favourite song in a matter of seconds rather than years.'

AI is famously data hungry, and researchers can now feed it astrophysics data. Because much of the data consists of observations of galaxies, the resulting models are likely to be particularly effective at accelerating galaxy evolution research, in which astronomers try to understand how galaxies have grown and developed since the Big Bang. For this research, scientists consider a galaxy's chemical composition, the ‘birth rate’ of its stars, the formation and growth of its black holes, and its structure, alongside other markers. This breadth of research naturally requires a breadth of observations – something the Multimodal Universe has in spades.

Over decades, astronomers have pushed cutting-edge technologies to their limits across many fields to observe the universe and its constituent parts in multiple modes, such as near-infrared images from the James Webb Space Telescope (JWST) or measurements of exoplanets from the Transiting Exoplanet Survey Satellite (TESS). The Multimodal Universe combines observations from many of astronomy's most important surveys and telescopes, including the Dark Energy Spectroscopic Instrument (DESI), the Sloan Digital Sky Survey (SDSS), and other major space- and ground-based observatories, to enable new science across the field.

In total, this first release contains 100 terabytes of data. For comparison, you would have to stream music day and night for over 150 years to transfer that much data. Never before has there been a systematic public release of data spanning so many astronomical observations and surveys. The Multimodal Universe contains:

- over 120 million galaxy images

- more than 5 million stellar and galactic spectra

- light curves for over 3.5 million astronomical objects

- detailed measurements of nearly 220 million stars from ESA's Gaia satellite

- and compendia of downstream labels such as supernova and galaxy classifications.
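The music-streaming comparison for the 100-terabyte total can be checked with a short calculation. The sketch below is illustrative only: the 160 kbit/s bitrate and the decimal definition of a terabyte (10^12 bytes) are assumptions chosen here, not figures given by the team.

```python
# Rough check of the "over 150 years of music" comparison.
# ASSUMPTIONS (not from the release): 1 TB = 1e12 bytes, and music is
# streamed at a constant 160 kbit/s.

SECONDS_PER_YEAR = 365.25 * 24 * 3600


def streaming_years(dataset_bytes: float, bitrate_bits_per_s: float) -> float:
    """Years of continuous streaming needed to transfer `dataset_bytes`."""
    seconds = dataset_bytes * 8 / bitrate_bits_per_s
    return seconds / SECONDS_PER_YEAR


DATASET_BYTES = 100e12      # 100 terabytes, decimal definition (assumed)
ASSUMED_BITRATE = 160_000   # bits per second (assumed streaming quality)

years = streaming_years(DATASET_BYTES, ASSUMED_BITRATE)
print(f"About {years:.0f} years of non-stop streaming")
```

Under these assumptions the result lands a little above 150 years; a higher assumed bitrate would shorten it and a lower one would lengthen it.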

'The Multimodal Universe makes accessing machine learning-ready astronomical datasets as easy as writing a single line of code,' says Helen Qu, a postdoctoral researcher at the Flatiron Institute. 'I'm excited to see how this can accelerate new developments in both astronomy and machine learning.'

Importantly, the data is being released in formats optimised for machine learning research. This is a key step toward enabling broad applications of machine learning in astronomy: until now, researchers have often had to (re)create their own datasets, at a huge cost to small and large projects alike. Along with the data, the team is publishing benchmarking results that demonstrate its potential applications, which range from classifying galaxies to better understand their evolution to improving early warning systems for supernova explosions so that astronomers don’t miss unique events.

'One of Multimodal Universe’s key features is its ability to combine data from multiple astronomical surveys,' says Liam Parker, a PhD student at Berkeley and a group member at Polymathic AI. 'This will be critical as multimodal machine learning grows in popularity across the physical sciences.'

The Multimodal Universe is freely available to researchers worldwide through multiple access points, including Hugging Face. The team has also released extensive documentation and tools to help scientists work with the data effectively.
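As a rough illustration of what access through Hugging Face might look like, the sketch below streams a few records with the `datasets` library rather than downloading a full survey. The organisation name `MultimodalUniverse`, the subset name `plasticc` mentioned in the note below, and the helper functions `hub_id` and `peek` are assumptions made for this example; the project's own documentation is the authority on the actual repository names and fields.

```python
from itertools import islice


def hub_id(subset: str, org: str = "MultimodalUniverse") -> str:
    """Build a Hugging Face Hub repository id (org name is an assumption)."""
    return f"{org}/{subset}"


def peek(subset: str, n: int = 1) -> list:
    """Return the first `n` records of a subset without a full download.

    Requires network access and the third-party `datasets` package
    (`pip install datasets`), which is imported lazily here.
    """
    from datasets import load_dataset

    # streaming=True yields records on demand instead of fetching
    # the whole dataset to local disk first.
    stream = load_dataset(hub_id(subset), split="train", streaming=True)
    return list(islice(stream, n))
```

With network access, a call such as `peek("plasticc")` would return a list holding the first record as a dictionary, assuming a subset of that name with a `train` split exists on the Hub.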

'We are witnessing a change of paradigm in the way AI is applied to astronomy and science in general,' says Marc Huertas-Company, research scientist at the Instituto de Astrofísica de Canarias. 'Supervised models trained for a specific task are being replaced by large multi-purpose models trained with large quantities of unlabelled and heterogenous data. The MMU dataset will play a key role in this transition.'

'By easing access to astronomical data, we hope to create new opportunities for cross pollination between astronomy and machine learning,' says Michael J. Smith, UniverseTBD member and Director of AI at Aspia Space. 'Open datasets like the Multimodal Universe will help the community build better, more transparent foundation models. This is essential as we move toward more sophisticated AI applications in astronomy.'

The data is already finding use in many of the contributors' astrophysics research. The Polymathic AI team itself is now using the datasets to train AI models. In the coming months, they will deploy these models on various tasks to see how successful these well-rounded, well-trained AIs are at tackling complex scientific problems.

The Polymathic AI initiative, https://polymathic-ai.org/, is led by researchers at the Simons Foundation and its Flatiron Institute, New York University, the University of Cambridge, Princeton University, the French Centre National de la Recherche Scientifique and the Lawrence Berkeley National Laboratory. It uses technology similar to that powering large language models such as OpenAI’s ChatGPT or Google’s Gemini. But instead of ingesting text, the project’s models learn using scientific datasets from across astrophysics, biology, acoustics, chemistry, fluid dynamics and more, aiming to give the models cross-disciplinary scientific knowledge.

The new dataset will be described at the leading computer science conference NeurIPS, to be held in Vancouver. For more information on the Multimodal Universe, see the paper, poster and video recording at https://neurips.cc/virtual/2024/poster/97791 or visit the project’s homepage at https://github.com/MultimodalUniverse.