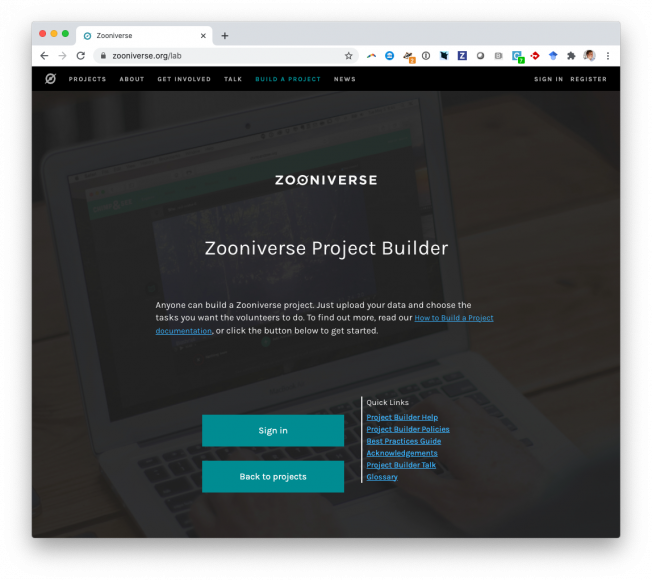

Measuring the conceptual understandings of citizen scientists participating in Zooniverse projects: A first approach

Astronomy Education Review 12:1 (2013)

Abstract:

The Zooniverse projects turn everyday people into "citizen scientists" who work online with real data to assist scientists in conducting research on a variety of topics related to galaxies, exoplanets, lunar craters, and solar flares, among others. This paper describes our initial study to assess the conceptual knowledge and reasoning abilities of citizen scientists participating in two Zooniverse projects: Galaxy Zoo and Moon Zoo. In order to measure their knowledge and abilities, we developed two new assessment instruments, the Zooniverse Astronomical Concept Survey (ZACS) and the Lunar Cratering Concept Inventory (LCCI). We found that citizen scientists with the highest level of participation in the Galaxy Zoo and Moon Zoo projects also have the highest average correct scores on the items of the ZACS and LCCI. However, the limited nature of the data provided by Zooniverse participants prevents us from being able to evaluate the statistical significance of this finding, and we make no claim about whether there is a causal relationship between one's participation in Galaxy Zoo or Moon Zoo and one's level of conceptual understanding or reasoning ability on the astrophysical topics assessed by the ZACS or the LCCI. Overall, both the ZACS and the LCCI provide Zooniverse's citizen scientists with items that offer a wide range of difficulties. Using the data from the small subset of participants who responded to all items of the ZACS, we found evidence suggesting the ZACS is a reliable instrument (α=0.78), although twenty-one of its forty items appear to have point biserials less than 0.3. The work reported here provides significant insight into the strengths and limitations of various methods for administering assessments to citizen scientists. Researchers who wish to study the knowledge and abilities of citizen scientists in the future should be sure to design their research methods to avoid the pitfalls identified by our initial findings. 
© 2013 The American Astronomical Society.

Planet Hunters. VI: An Independent Characterization of KOI-351 and Several Long Period Planet Candidates from the Kepler Archival Data

ArXiv 1310.5912 (2013)

Abstract:

We report the discovery of 14 new transiting planet candidates in the Kepler field from the Planet Hunters citizen science program. None of these candidates overlapped with Kepler Objects of Interest (KOIs) at the time of submission. We report the discovery of one more addition to the six planet candidate system around KOI-351, making it the only seven planet candidate system from Kepler. Additionally, KOI-351 bears some resemblance to our own solar system, with the inner five planets ranging from Earth to mini-Neptune radii and the outer planets being gas giants; however, this system is very compact, with all seven planet candidates orbiting $\lesssim 1$ AU from their host star. A Hill stability test and an orbital integration of the system show that the system is stable. Furthermore, we significantly add to the population of long period transiting planets; periods range from 124 to 904 days, eight of them more than one Earth year long. Seven of these 14 candidates reside in their host star's habitable zone.

Galaxy Zoo: Observing Secular Evolution Through Bars

ArXiv 1310.2941 (2013)

Abstract:

In this paper, we use the Galaxy Zoo 2 dataset to study the behavior of bars in disk galaxies as a function of specific star formation rate (SSFR) and bulge prominence. Our sample consists of 13,295 disk galaxies, with an overall (strong) bar fraction of $23.6\pm 0.4\%$, of which 1,154 barred galaxies also have bar length measurements. These samples are the largest ever used to study the role of bars in galaxy evolution. We find that the likelihood of a galaxy hosting a bar is anti-correlated with SSFR, regardless of stellar mass or bulge prominence. We find that the trends of bar likelihood and bar length with bulge prominence are bimodal with SSFR. We interpret these observations using state-of-the-art simulations of bar evolution which include live halos and the effects of gas and star formation. We suggest our observed trends of bar likelihood with SSFR are driven by the gas fraction of the disks, a factor demonstrated to significantly retard both bar formation and evolution in models. We interpret the bimodal relationship between bulge prominence and bar properties as due to the complicated effects of classical bulges and central mass concentrations on bar evolution, and also to the growth of disky pseudobulges by bar evolution. These results represent empirical evidence for secular evolution driven by bars in disk galaxies. This work suggests that bars are not stagnant structures within disk galaxies, but are a critical evolutionary driver of their host galaxies in the local universe ($z<1$).

Morphology in the Era of Large Surveys

ArXiv 1310.0556 (2013)

Abstract:

The study of galaxies has changed dramatically over the past few decades with the advent of large-scale astronomical surveys. These large collaborative efforts have made available high-quality imaging and spectroscopy of hundreds of thousands of systems, providing a body of observations which has significantly enhanced our understanding not only of cosmology and large-scale structure in the universe but also of the astrophysics of galaxy formation and evolution. Throughout these changes, one thing that has remained constant is the role of galaxy morphology as a clue to understanding galaxies. But obtaining morphologies for large numbers of galaxies is challenging; this topic, "Morphology in the era of large surveys", was the subject of a recent discussion meeting at the Royal Astronomical Society, and this "Astronomy and Geophysics" article is a report on that meeting.

Galaxy Zoo 2: detailed morphological classifications for 304,122 galaxies from the Sloan Digital Sky Survey

ArXiv 1308.3496 (2013)