A Herschel-ATLAS study of dusty spheroids: probing the minor-merger process in the local Universe

ArXiv 1307.8127 (2013)

Abstract:

We use multi-wavelength (0.12-500 micron) photometry from Herschel-ATLAS, WISE, UKIDSS, SDSS and GALEX to study 23 nearby spheroidal galaxies (SGs) with prominent dust lanes (DLSGs). DLSGs are considered to be remnants of recent minor mergers, making them ideal laboratories for studying both the interstellar medium (ISM) of spheroids and minor-merger-driven star formation in the nearby Universe. The DLSGs exhibit star formation rates (SFRs) between 0.01 and 10 MSun yr^-1, with a median of 0.26 MSun yr^-1 (a factor of 3.5 greater than that of the average SG). The median dust mass, dust-to-stellar mass ratio and dust temperature in these galaxies are ~10^7.6 MSun, ~0.05% and ~19.5 K respectively. The dust masses are at least a factor of 50 greater than expected from stellar mass loss and, like the SFRs, show no correlation with galaxy luminosity, suggesting that both the ISM and the star formation have external drivers. Adopting literature gas-to-dust ratios and star formation histories derived from fits to the panchromatic photometry, we estimate that the median current and initial gas-to-stellar mass ratios in these systems are ~4% and ~7% respectively. If, as indicated by recent work, the minor mergers that drive star formation in spheroids with (NUV-r) > 3.8 (the colour range of our DLSGs) have stellar mass ratios between 1:6 and 1:10, then the satellite gas fractions are likely to exceed 50%.

Galaxy Zoo: Motivations of Citizen Scientists

ArXiv 1303.6886 (2013)

Abstract:

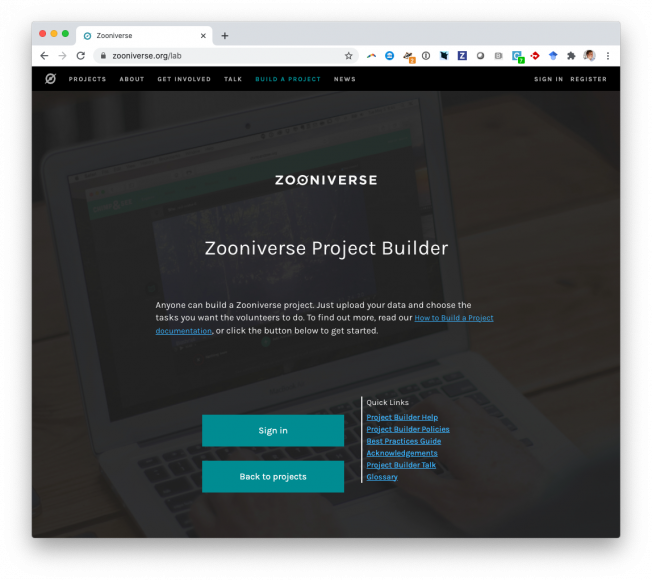

Citizen science, in which volunteers work with professional scientists to conduct research, is expanding due to large online datasets. To plan projects, it is important to understand volunteers' motivations for participating. This paper analyzes results from an online survey of nearly 11,000 volunteers in Galaxy Zoo, an astronomy citizen science project. Results show that volunteers' primary motivation is a desire to contribute to scientific research. We encourage other citizen science projects to study the motivations of their volunteers, to see whether and how these results may be generalized to inform the field of citizen science.

Unproceedings of the Fourth .Astronomy Conference (.Astronomy 4), Heidelberg, Germany, July 9-11, 2012

ArXiv 1301.5193 (2013)

Abstract:

The goal of the .Astronomy conference series is to bring together astronomers, educators, developers and others interested in using the Internet as a medium for astronomy. Attendance at the event is limited to approximately 50 participants, and days are split into mornings of scheduled talks, followed by 'unconference' afternoons, where sessions are defined by participants during the course of the event. Participants in unconference sessions are discouraged from giving formal presentations; discussion, workshop-style formats and informal practical tutorials are encouraged instead. The conference also designates one day as a 'hack day', in which attendees collaborate in groups on day-long projects for presentation the following morning. These hacks are often a way of concentrating effort, learning new skills, and exploring ideas in a practical fashion. The emphasis on informal, focused interaction makes recording proceedings more difficult than for a normal meeting. While the first .Astronomy conference is preserved formally in a book, more recent iterations have not been documented. We therefore, in the spirit of .Astronomy, report 'unproceedings' from .Astronomy 4, which was held in Heidelberg in July 2012.

Planet Hunters. V. A Confirmed Jupiter-Size Planet in the Habitable Zone and 42 Planet Candidates from the Kepler Archive Data

ArXiv 1301.0644 (2013)

Abstract:

We report the latest Planet Hunters results, including PH2 b, a Jupiter-size (R_PL = 10.12 ± 0.56 R_E) planet orbiting in the habitable zone of a solar-type star. PH2 b was elevated from candidate status when a series of false-positive tests yielded a 99.9% confidence level that the transit events detected around the star KIC 12735740 had a planetary origin. Planet Hunters volunteers have also discovered 42 new planet candidates in the Kepler public archive data, of which 33 have at least three recorded transits. Most of these transit candidates have orbital periods longer than 100 days, and 20 are potentially located in the habitable zones of their host stars. Nine candidates were detected with only two transit events, and their prospective periods are longer than 400 days. The photometric models suggest that these objects have radii ranging from Neptune size to Jupiter size. These detections nearly double the number of gas giant planet candidates orbiting at habitable-zone distances. We conducted spectroscopic observations of nine of the brighter targets to improve the stellar parameters, and we obtained adaptive optics imaging of four of the stars to search for blended background or foreground stars that could confuse our photometric modeling. We present an iterative analysis method to derive the stellar and planet properties and uncertainties by combining the available spectroscopic parameters, stellar evolution models and transit light curve parameters, weighted by the measurement errors. Planet Hunters is a citizen science project that crowd-sources the assessment of NASA Kepler light curves. The discovery of these 43 planet candidates demonstrates the success of citizen scientists at identifying planet candidates, even in longer-period orbits with only two or three transit events.

An introduction to the Zooniverse

AAAI Workshop - Technical Report WS-13-18 (2013) 103